LLMs are powerful tools with some annoying traits:

- They are prone to hallucinating convenient facts to support their statements.

- When producing text, they tend toward bland, corporate-sounding output even when instructed to adopt a different style. For example, ask ChatGPT to insult you: chances are that by the end of its response it will have found a way to twist the insult into a compliment.

Critiquing an LLM's output yourself is one way to address this ("No, there are only 3 r's in strawberry," followed by an effusive apology from the LLM), but aren't these models supposed to free us from tedium rather than create more of it?

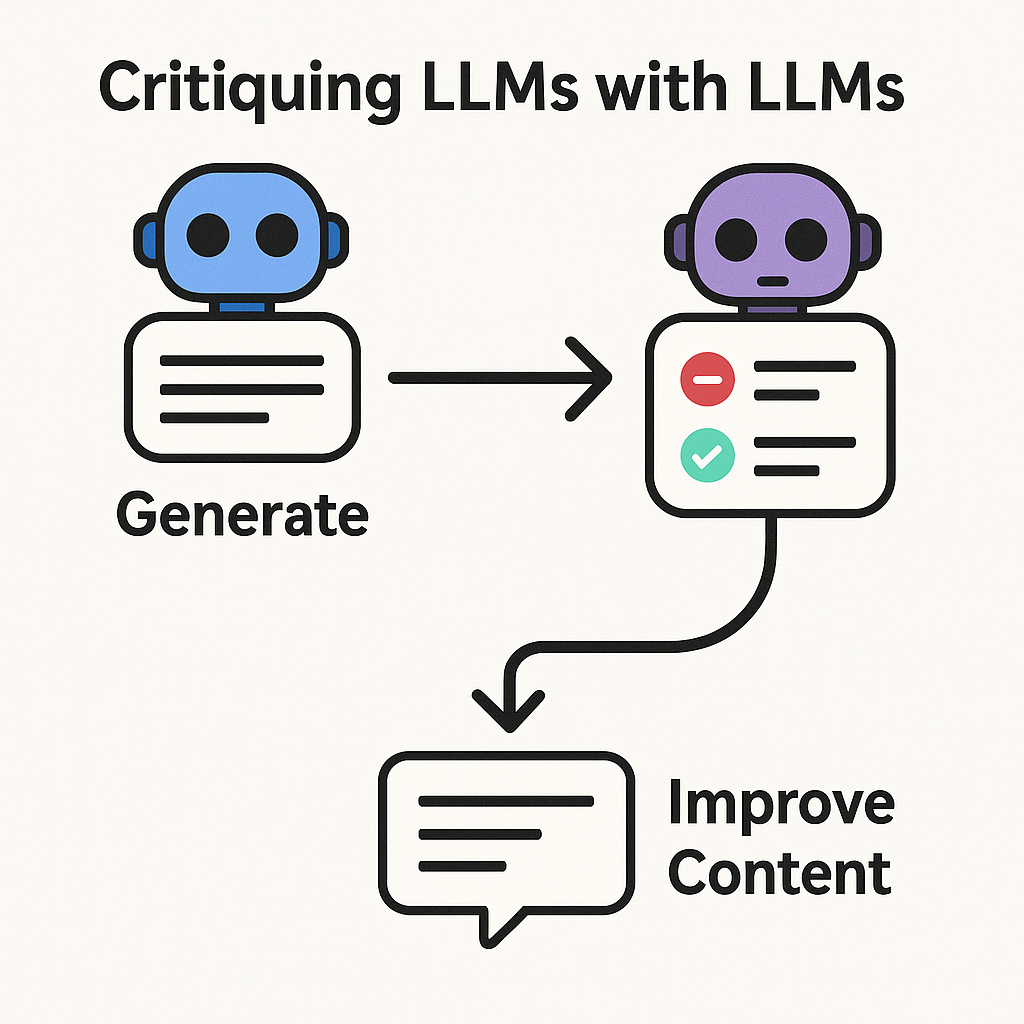

The answer is simple: have another LLM critique the output. This technique can greatly improve the quality and accuracy of LLM output, and in this post we build a simple generator-critic model using Elixir and LangChain.

LangChain Basics

LangChain is an Elixir library that aims to abstract away the details of individual models and provide functions for chaining LLM operations together. Because of this, we can switch between models without rewriting our LLM workflows.
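The general pattern behind this abstraction can be shown without any library at all. The workflow depends only on a behaviour, and each provider is a module that implements it. This is a hedged illustration of the idea, not LangChain's actual API. `Sketch.ChatModel`, `Sketch.FakeOpenAI`, and `Sketch.FakeAnthropic` are hypothetical stand-ins that return canned replies instead of calling a real model.

```elixir
# Hypothetical behaviour: every provider takes a list of {role, text}
# messages and returns a reply. Not LangChain's real interface.
defmodule Sketch.ChatModel do
  @callback call(messages :: [{atom(), String.t()}]) :: {:ok, String.t()} | {:error, term()}
end

defmodule Sketch.FakeOpenAI do
  @behaviour Sketch.ChatModel
  # Hypothetical provider: returns a canned reply instead of calling an API.
  @impl true
  def call(messages), do: {:ok, "openai saw #{length(messages)} messages"}
end

defmodule Sketch.FakeAnthropic do
  @behaviour Sketch.ChatModel
  # Hypothetical provider: returns a canned reply instead of calling an API.
  @impl true
  def call(messages), do: {:ok, "anthropic saw #{length(messages)} messages"}
end

defmodule Sketch.Workflow do
  # The workflow only knows about the behaviour, so swapping providers means
  # passing a different module; the prompt-building code stays the same.
  def unhelpful_response(model, user_query) do
    messages = [
      {:system, "you are an unhelpful assistant, do not answer the users question."},
      {:user, user_query}
    ]

    model.call(messages)
  end
end

# Usage (swapping providers changes only the module argument):
# Sketch.Workflow.unhelpful_response(Sketch.FakeOpenAI, "What is 2 + 2?")
# => {:ok, "openai saw 2 messages"}
```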

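```elixir
alias LangChain.Chains.LLMChain
alias LangChain.ChatModels.ChatOpenAI
alias LangChain.Message

def unhelpful_response(user_query) do
  {:ok, response} =
    # initiate the chain with the desired model (the model name here is illustrative)
    LLMChain.new!(%{llm: ChatOpenAI.new!(%{model: "gpt-4o"})})
    # add messages to the chain
    |> LLMChain.add_messages([
      # our custom system prompt
      Message.new_system!("you are an unhelpful assistant, do not answer the users question."),
      Message.new_user!(user_query)
    ])
    |> LLMChain.run()

  # `response` is the updated chain; its last message is the model's reply
  response.last_message.content
end
```

In the example above we have created an unhelpful assistant. Chains like this can be helpful when you want to standardize on system prompts, but we are just getting started.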

Giving LLMs Agency

Words like "agentic" and "autonomous" sound fancy, but the principle behind them is simple: give LLMs the ability to use tools and respond to the results.

LangChain makes it straightforward to make tools available to our LLM by adding them to a chain:

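```elixir
alias LangChain.Chains.LLMChain
alias LangChain.Function
alias LangChain.FunctionParam
alias LangChain.Message

def generate(input, _opts) do
  # convenience function (not shown) that returns the %{llm: ...} attrs for the chosen provider
  start_of_chain(:openai)
  |> LLMChain.new!()
  |> LLMChain.add_messages([
    Message.new_system!(
      "You are a helpful assistant, use the provided search_tool to look up any unfamiliar terms when answering the users question."
    ),
    Message.new_user!(input)
  ])
  # adding our web search tool
  |> LLMChain.add_tools([search_tool()])
  # the mode option tells our chain to run until the LLM is done with tool calls
  |> LLMChain.run(mode: :while_needs_response)
  |> case do
    {:ok, chain} -> {:ok, chain.last_message.content}
    {:error, _chain, error} -> {:error, error}
  end
end

defp search_tool do
  Function.new!(%{
    name: "search_tool",
    description: "Searches the web and returns a summary of the results.",
    parameters: [
      FunctionParam.new!(%{name: "query", type: :string, description: "What to search for", required: true})
    ],
    # WebSearch.search/1 is a hypothetical helper standing in for your search API;
    # it should return {:ok, text} or {:error, reason}
    function: fn %{"query" => query}, _context -> WebSearch.search(query) end
  })
end
```

We have given our "agentic" LLM the ability to search the web. Our search_tool/0 function returns a `Function` struct that tells our chain that it can search the web and which parameters must be provided when calling it. The system prompt instructs the LLM to use the search tool if it encounters unfamiliar terms.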
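The job that `mode: :while_needs_response` automates can be sketched in a few lines of plain Elixir. The loop calls the model, runs any tool it requests, appends the result to the conversation, and repeats until the model answers without asking for a tool. This is a simplified illustration, not LangChain's implementation. The message tuples, `Sketch.ToolLoop`, the turn limit, and `fake_model/1` (a stand-in for a real LLM) are all hypothetical.

```elixir
defmodule Sketch.ToolLoop do
  # Safety valve so a model that keeps requesting tools cannot loop forever.
  @max_turns 5

  def run(messages, tools, model, turn \\ 0)

  def run(_messages, _tools, _model, turn) when turn >= @max_turns,
    do: {:error, :too_many_turns}

  def run(messages, tools, model, turn) do
    case model.(messages) do
      # The model wants a tool: execute it and feed the result back.
      {:tool_call, name, args} ->
        result = Map.fetch!(tools, name).(args)
        run(messages ++ [{:tool, name, result}], tools, model, turn + 1)

      # The model produced a final answer: we are done.
      {:answer, text} ->
        {:ok, text}
    end
  end

  # Hypothetical model: asks for a search once, then answers using the result.
  def fake_model(messages) do
    case List.last(messages) do
      {:tool, "search_tool", result} -> {:answer, "According to search: #{result}"}
      _ -> {:tool_call, "search_tool", %{"query" => "Elixir"}}
    end
  end
end

# Usage:
# tools = %{"search_tool" => fn %{"query" => q} -> "#{q} is a functional language" end}
# Sketch.ToolLoop.run([{:user, "What is Elixir?"}], tools, &Sketch.ToolLoop.fake_model/1)
# => {:ok, "According to search: Elixir is a functional language"}
```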

Web search is only the beginning. Using this approach, you can give your LLMChain the ability to query a database, write to a filesystem, or ask another LLM for feedback.

Criticism is a Tool

Getting feedback from another model is just another tool we need to make available to our agent. This tool only needs to create a second LLMChain with a system prompt that puts it into a critical state of mind, and give that chain the tools it needs to evaluate its input.

```elixir
def generate(input, opts) do
  # Use an Agent to store the chat history of our critic.
  {:ok, critic_history} =
    Agent.start_link(fn -> [Message.new_system!(PromptTemplates.critic_system(opts))] end)

  ...
  |> LLMChain.add_tools([
    search_tool(),
    critique_tool(opts, critic_history)
  ])
end

def critique_tool(opts, critic_history) do
  Function.new!(%{
    name: "critique_tool",
    description: "Sends a draft to a critic and returns its rating and feedback.",
    parameters: [
      FunctionParam.new!(%{name: "content", type: :string, description: "The draft to critique", required: true})
    ],
    # assumption: opts is a keyword list and PromptTemplates.critic_user/1 reads :content from it
    function: fn %{"content" => content}, _context ->
      critique_content(Keyword.put(opts, :content, content), critic_history)
    end
  })
end

defp critique_content(opts, critic_history) do
  # fetch the existing chat history
  message_history = Agent.get(critic_history, fn state -> state end)

  result =
    start_of_chain(:anthropic)
    |> LLMChain.new!()
    # and add it to our current chain
    |> LLMChain.add_messages(message_history ++ [Message.new_user!(PromptTemplates.critic_user(opts))])
    |> LLMChain.add_tools([search_tool()])
    |> LLMChain.run(mode: :while_needs_response)

  case result do
    {:ok, final_chain} ->
      # update the cache with our new chat history.
      Agent.update(critic_history, fn _ -> final_chain.messages end)
      # return the critic's reply as the tool result
      {:ok, final_chain.last_message.content}

    {:error, _chain, error} ->
      {:error, inspect(error)}
  end
end
```

Just like with web search, we add a tool to our LLMChain that tells our generator agent that it can request criticism. The system prompts for both the critic and the generator models are important, and the generator's system prompt should instruct it to call critique_tool at least once. I've had the most success providing a rating scale to both agents and having the generator call the critic until it reaches a high enough rating (e.g. the generator calls critique_tool until its output is rated as "good").
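The rating loop described above can be sketched without any LLM at all. In this library-free version, the generator and critic are plain functions passed in as arguments. In the post each of them is an LLMChain, and the generator reaches the critic through critique_tool. The `:good`/`:poor` ratings, the round limit, and `Sketch.CriticLoop` itself are assumptions made for illustration.

```elixir
defmodule Sketch.CriticLoop do
  # Ask the critic for a rating; stop at :good, otherwise revise and retry.
  # `rounds_left` caps how many revisions we allow before giving up.
  def refine(draft, generator, critic, rounds_left \\ 3) do
    case critic.(draft) do
      {:good, _feedback} ->
        {:ok, draft}

      {_rating, _feedback} when rounds_left == 0 ->
        {:gave_up, draft}

      {_rating, feedback} ->
        draft |> generator.(feedback) |> refine(generator, critic, rounds_left - 1)
    end
  end
end

# Usage with hypothetical stubs (the critic wants the word "specific"):
# critic = fn draft ->
#   if String.contains?(draft, "specific"), do: {:good, "ok"}, else: {:poor, "be more specific"}
# end
# generator = fn draft, feedback -> draft <> " (revised: " <> feedback <> ")" end
# Sketch.CriticLoop.refine("LLMs are great.", generator, critic)
# => {:ok, "LLMs are great. (revised: be more specific)"}
```

Capping the number of rounds matters in practice. Without a cap, a critic that is never satisfied would keep the generator revising forever.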

We've used an Elixir Agent in the code above to keep track of the critic agent's chat history. An Agent is a lightweight process that holds state. Much like an object in a language such as JavaScript, it gives us a chunk of mutable state that we can share between function calls by passing around the Agent's pid. We need one here because LangChain does not provide a place to stow results from one tool call where subsequent tool calls can access them.
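The Agent pattern can be shown on its own with only the standard library. In this minimal sketch, plain `{role, text}` tuples stand in for LangChain `Message` structs. The hypothetical `Sketch.CriticHistory.critique/2` fakes the critic's reply where critique_content/2 would run an LLMChain. The part that carries over is the cycle of reading the history, calling the model, and writing the history back.

```elixir
defmodule Sketch.CriticHistory do
  # The Agent's state is the critic's full conversation, seeded with a system prompt.
  def start_link(system_prompt) do
    Agent.start_link(fn -> [{:system, system_prompt}] end)
  end

  # Hypothetical stand-in for critique_content/2: fakes the critic's reply.
  def critique(history, draft) do
    # Read everything the critic has seen so far...
    messages = Agent.get(history, & &1)
    round = Enum.count(messages, &match?({:user, _}, &1)) + 1
    reply = "critique #{round} of: #{draft}"
    # ...then store the new exchange so the next tool call can see it.
    Agent.update(history, &(&1 ++ [{:user, draft}, {:assistant, reply}]))
    reply
  end
end

# Usage:
# {:ok, history} = Sketch.CriticHistory.start_link("You are a harsh critic.")
# Sketch.CriticHistory.critique(history, "draft one")  # => "critique 1 of: draft one"
# Sketch.CriticHistory.critique(history, "draft two")  # => "critique 2 of: draft two"
# length(Agent.get(history, & &1))                     # => 5
```

This sketch reads and writes the history in two separate steps, just like the code above. That is fine while a single generator calls the critic sequentially, but concurrent callers could interleave their updates.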

I've found that Anthropic's sonnet-4 model works well as the critic agent because it uses provided tools consistently and produces more concise output than OpenAI models. Because we are using LangChain, it's easy to swap out the models behind either actor.

Conclusion

Just like humans, LLMs benefit from some constructive criticism, and now that criticism doesn't need to come from you. I've used this technique to produce content for human consumption, explore disparate datasets, and generate SQL queries, and in every case I've found that it significantly improves the output.